In AI and machine learning, data annotation is the foundation that everything else rests on. Accurate labels turn raw data into something a model can actually learn from. Achieving that accuracy is hard, though, and even small labeling mistakes can noticeably degrade the performance of your systems.

By a widely cited estimate, data scientists spend around 80% of their time preparing and cleaning data, which underlines how much depends on accurate labeling. For Chief Data Officers, this makes a robust data annotation strategy a necessity. Below are five proven strategies, each with actionable steps, for improving data labeling precision.

1. Build a Team of Skilled and Specialized Annotators

Data annotation isn’t a one-size-fits-all task. It requires domain knowledge and technical expertise. Employing annotators who specialize in your industry ensures that even nuanced data points are labeled with accuracy.

Take healthcare, for example. Annotators working on medical datasets must understand terminology, anatomy, and diagnostic categories. Without this knowledge, data points could be mislabeled, jeopardizing the effectiveness of AI models in patient diagnosis.

To elevate accuracy, seek out annotators with a track record of working on projects similar to yours. Additionally, evaluate their ability to adapt to your specific annotation guidelines.

2. Train Annotators with Tailored Guidelines and Examples

Even experienced annotators need clear direction to deliver consistent results. Training programs should include:

- Annotation Protocols: Detailed guidelines that define what each label represents and how it should be applied.

- Real-World Scenarios: Practical examples that help annotators navigate ambiguous cases.

- Quality Standards: Benchmarks that outline acceptable accuracy levels and common pitfalls to avoid.

For instance, training annotators on ecommerce datasets might involve examples of how to label product images with variations in angles or lighting. Regular workshops and feedback loops ensure that annotators stay aligned with project goals and refine their skills over time.

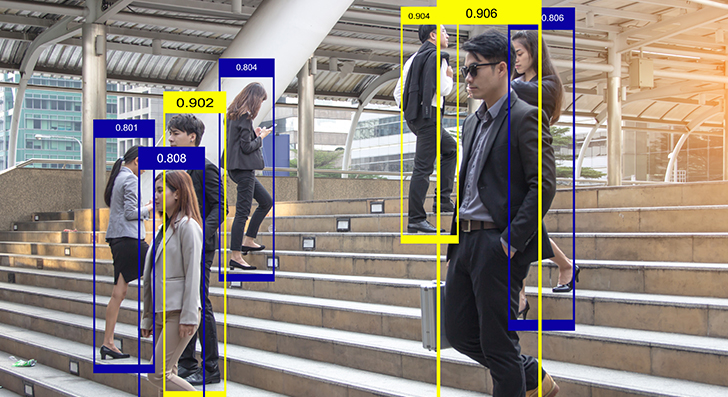

3. Combine Automated Tools with Human Oversight

While automation can expedite the annotation process, human oversight is crucial for nuanced tasks. Advanced tools equipped with AI can handle repetitive tasks, such as identifying objects in clear images. However, for subjective tasks like sentiment analysis, human expertise is indispensable.

The Dual Approach

- AI Tools: Automate initial labeling and flag anomalies for review.

- Human Reviewers: Validate the annotations, especially for complex or ambiguous data points.

This hybrid approach not only accelerates workflows but also ensures that your datasets maintain the accuracy needed to train high-performing AI models.
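As a minimal sketch of this dual approach, the snippet below routes model pre-labels by confidence: high-confidence labels are accepted automatically, and the rest are queued for a human reviewer. The `PreLabel` class, the `route_for_review` function, and the 0.9 threshold are illustrative assumptions, not part of any specific annotation tool.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    """A model-generated label (hypothetical structure for illustration)."""
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_for_review(pre_labels, threshold=0.9):
    """Split pre-labels into an auto-accepted list and a human-review queue."""
    auto_accepted, needs_review = [], []
    for p in pre_labels:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review


batch = [
    PreLabel("img-001", "cat", 0.98),
    PreLabel("img-002", "dog", 0.62),
    PreLabel("img-003", "cat", 0.91),
]
accepted, review = route_for_review(batch, threshold=0.9)
print([p.item_id for p in accepted])  # ['img-001', 'img-003']
print([p.item_id for p in review])    # ['img-002']
```

In practice the threshold would be tuned per task: subjective tasks such as sentiment analysis usually warrant sending a larger share of items to human reviewers.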

4. Use Benchmarks to Set Consistency Standards

Creating a “gold standard” or benchmark dataset provides annotators with a clear reference for quality. This dataset acts as a foundation for comparison, helping annotators align their work with project expectations.

For example, a gold standard in a social media sentiment analysis project might include pre-labeled tweets categorized as positive, negative, or neutral.

Annotators can check their results against this reference to make sure they are meeting the required level of accuracy.

Consistency is the main advantage of this approach, as it reduces divergences between annotators and ensures uniformity across the dataset.
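One way to make the comparison against a gold standard concrete is to score each annotator's labels against the reference set and list the disagreements. The sketch below assumes labels are stored as plain dictionaries keyed by item ID; the function name `score_against_gold` and the sample data are hypothetical.

```python
def score_against_gold(annotations, gold):
    """Return (accuracy, disagreements) over items present in the gold set."""
    scored = [item for item in annotations if item in gold]
    if not scored:
        raise ValueError("no overlap between annotations and the gold set")
    # Map each mismatched item to (annotator label, gold label).
    disagreements = {
        item: (annotations[item], gold[item])
        for item in scored
        if annotations[item] != gold[item]
    }
    accuracy = 1 - len(disagreements) / len(scored)
    return accuracy, disagreements


gold = {"t1": "positive", "t2": "negative", "t3": "neutral", "t4": "negative"}
annotator_a = {"t1": "positive", "t2": "negative", "t3": "positive", "t4": "negative"}

accuracy, misses = score_against_gold(annotator_a, gold)
print(f"{accuracy:.2f}")  # 0.75
print(misses)             # {'t3': ('positive', 'neutral')}
```

The list of disagreements is often more useful than the accuracy figure itself, because it shows which label boundaries (here, positive versus neutral) the guidelines need to clarify.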

5. Regularly Audit Data and Provide Feedback

Quality assurance doesn’t stop at the annotation stage. Conducting periodic audits helps identify errors and patterns that may compromise your dataset’s integrity. By sampling annotated data at intervals, you can pinpoint recurring issues and rectify them before they escalate.

Feedback Mechanism

- Highlight common errors and share corrective measures.

- Recognize well-executed annotations to motivate annotators.

- Use audit results to further fine-tune annotation guidelines.

An iterative feedback loop fosters a culture of continuous improvement, ensuring that every project phase benefits from the lessons learned in previous stages.
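A periodic audit can be sketched as two steps: draw a random sample of annotated items, then estimate the error rate from what reviewers find. The code below is a minimal illustration; the function names are hypothetical, and the interval uses a simple normal approximation, which is an assumption that works best for reasonably large samples.

```python
import math
import random


def draw_audit_sample(item_ids, sample_size, seed=None):
    """Pick a random subset of item IDs for review, without replacement."""
    items = list(item_ids)
    rng = random.Random(seed)
    return rng.sample(items, min(sample_size, len(items)))


def estimate_error_rate(review_results):
    """review_results: list of booleans, True where the reviewer found an error.

    Returns (rate, lower, upper) with an approximate 95% interval.
    """
    n = len(review_results)
    if n == 0:
        raise ValueError("no reviewed items")
    rate = sum(review_results) / n
    margin = 1.96 * math.sqrt(rate * (1 - rate) / n)
    return rate, max(0.0, rate - margin), min(1.0, rate + margin)


to_review = draw_audit_sample(range(10_000), sample_size=200, seed=42)
print(len(to_review))  # 200

# Suppose reviewers found 12 errors among the 200 sampled items.
rate, lower, upper = estimate_error_rate([True] * 12 + [False] * 188)
print(f"{rate:.3f} ({lower:.3f} to {upper:.3f})")  # 0.060 (0.027 to 0.093)
```

Tracking this estimate from one audit to the next shows whether guideline updates and feedback are actually reducing errors.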

Additional Best Practices to Elevate Accuracy

- Define Deliverables Clearly

Ensure that annotators have a clear understanding of what constitutes “complete and correct” annotations. Providing precise definitions and examples can reduce confusion and errors.

- Balance Speed with Quality

Avoid pressuring annotators to prioritize speed at the expense of accuracy. Allocate sufficient time and resources to each task to ensure the highest quality outputs.

- Partner with Proven Annotation Providers

Reputable data annotation services providers come equipped with experienced teams and well-defined workflows. They can handle large-scale projects efficiently while maintaining quality, making them an ideal partner for businesses aiming for excellence.

Techniques to Strengthen Quality Assurance

Quality assurance methods can elevate your data annotation efforts by introducing structure and measurable benchmarks. Here are some proven techniques:

- Sample-Based Review

Randomly review a subset of annotated data to identify errors. This sampling method is cost-effective and helps maintain consistent quality across large datasets.

- Consensus-Based Annotation

Engage multiple annotators to work on the same data points and compare their results. Differences in labeling can prompt discussion, ultimately refining annotation standards and reducing bias.

- Statistical Validation

Use statistics such as Fleiss’ kappa or Cronbach’s alpha to measure inter-annotator agreement. These metrics quantify consistency and highlight areas where guidelines may need adjustment.

- Iterative Refinement

After every audit, update the annotation protocols and retrain annotators. This iterative process keeps the team aligned with project goals and improves overall performance.
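To illustrate consensus-based annotation and statistical validation together, the sketch below resolves each item by majority vote and computes Fleiss' kappa from scratch using its standard definition. The function names and sample ratings are hypothetical, and the code assumes every item was labeled by the same number of annotators.

```python
from collections import Counter


def majority_label(labels):
    """Return the most common label, or None when the top two are tied."""
    counts = Counter(labels).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None  # tie: escalate to discussion or an expert reviewer
    return counts[0][0]


def fleiss_kappa(ratings):
    """ratings: one list of labels per item, same number of raters for each."""
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("each item needs the same number (>= 2) of ratings")

    category_totals = Counter()
    per_item_agreement = []
    for item_labels in ratings:
        counts = Counter(item_labels)
        category_totals.update(counts)
        # Share of rater pairs that agree on this item.
        agreeing = sum(c * (c - 1) for c in counts.values())
        per_item_agreement.append(agreeing / (n_raters * (n_raters - 1)))

    observed = sum(per_item_agreement) / n_items
    total = n_items * n_raters
    expected = sum((c / total) ** 2 for c in category_totals.values())
    if expected == 1:
        return 1.0  # only one category was ever used; treated as full agreement here
    return (observed - expected) / (1 - expected)


ratings = [
    ["pos", "pos", "pos"],
    ["neg", "neg", "pos"],
    ["neu", "neu", "neu"],
    ["neg", "neg", "neg"],
]
print([majority_label(r) for r in ratings])  # ['pos', 'neg', 'neu', 'neg']
print(f"{fleiss_kappa(ratings):.3f}")        # 0.745
```

A kappa near 1 indicates strong agreement, while values near 0 mean annotators agree no more often than chance would predict, which usually points to ambiguous guidelines rather than careless annotators.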

The Role of AI Tools in Enhancing Annotation

AI is transforming data annotation, introducing speed, accuracy, and scalability that were previously unattainable with manual processes alone. Here’s how AI-powered tools are reshaping the landscape:

- Automated Pre-Annotation

AI tools can handle the initial stages of annotation by labeling data automatically based on predefined patterns and algorithms. Human annotators then refine these labels, focusing on nuances that require contextual understanding. This dual approach minimizes repetitive tasks and allows annotators to concentrate on areas where human judgment is crucial.

- Intelligent Quality Assurance

AI systems excel at spotting errors in annotated datasets. They can flag inconsistencies, detect mislabeled data, and recognize patterns that deviate from the defined standards. By automating quality checks, these tools ensure a higher level of consistency and significantly reduce the time spent on manual reviews.

- Adaptive Learning for Continuous Improvement

AI tools equipped with machine learning capabilities improve over time by learning from human corrections. For instance, when annotators adjust automated labels, the system adapts and refines its future outputs. This creates a feedback loop that increases accuracy with every iteration, making the annotation process more efficient and reliable.

- Enhanced Scalability

Scaling data annotation efforts to meet project demands can be daunting. AI-powered tools simplify this process by handling massive datasets with ease. Whether your project involves millions of images or complex text analysis, these tools can process large volumes quickly, ensuring deadlines are met without compromising quality.
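One very simple form of automated quality check, shown here as a rule-based sketch rather than a trained model, is to flag identical inputs that received conflicting labels. The function name `find_conflicting_labels` and the sample records are hypothetical, and the normalization (lowercasing and collapsing whitespace) is an assumption that suits short text items.

```python
from collections import defaultdict


def find_conflicting_labels(records):
    """records: iterable of (text, label) pairs.

    Returns {normalized_text: sorted labels} for texts with more than one label.
    """
    labels_by_text = defaultdict(set)
    for text, label in records:
        key = " ".join(text.lower().split())  # normalize case and whitespace
        labels_by_text[key].add(label)
    return {
        key: sorted(labels)
        for key, labels in labels_by_text.items()
        if len(labels) > 1
    }


records = [
    ("Great phone!", "positive"),
    ("great  phone!", "negative"),
    ("Battery died fast", "negative"),
    ("Battery died fast", "negative"),
]
print(find_conflicting_labels(records))
# {'great phone!': ['negative', 'positive']}
```

Flagged items can then be sent back to human reviewers, so the automated check narrows the manual review queue instead of replacing it.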

The Bottom Line

You can greatly improve annotation accuracy by putting together a skilled team, offering customized training, utilizing cutting-edge tools, and enforcing strong quality assurance practices.

Collaborating with a reliable data annotation services provider ensures access to expertise and efficient workflows, positioning your projects for success. Remember, accurate annotations are not just a technical necessity—they’re a strategic advantage.

Ready to take the next step? Start by evaluating your current annotation processes and identifying areas for improvement. With the right strategies and tools, you can create high-quality datasets that drive exceptional performance.