Artificial intelligence is transforming software development and the product lifecycle. However, it also brings challenges in data security, model protection, and regulatory compliance. Traditional security frameworks were not designed for AI-powered systems and fail to address these challenges, so organizations need to adapt quickly.

Data exposure, ethical concerns, and the demand for quicker time-to-market require new approaches. Organizations must rethink their security strategies and integrate compliance into AI development from the start. This blog describes the key considerations, including data and model security, regulatory compliance, and best practices, to ensure AI innovations remain secure and compliant.

Table of Contents

The Evolving Threat Landscapes for AI-Driven Software

Ensuring Secure AI Product Development: Exploring Practical Strategies

The Evolving Threat Landscapes for AI-Driven Software

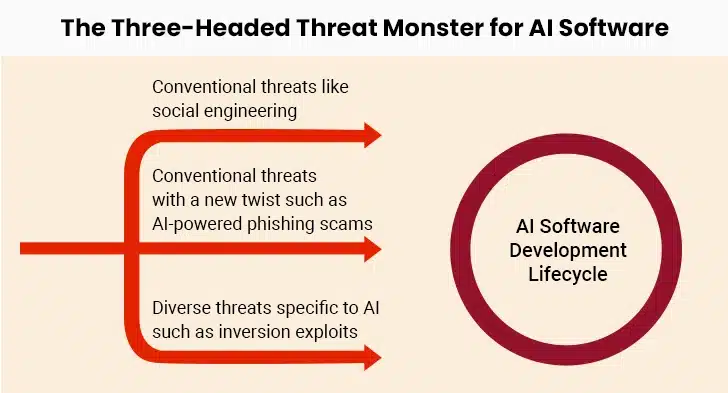

As businesses race to generate value from AI-powered software, many neglect a crucial aspect: security and compliance. According to the IBM Institute for Business Value (IBV), only 24% of AI projects include a security component, although 82% of respondents agree that trustworthy and secure AI is essential for business success. This is a worrying gap, because businesses fail to realize that they are facing a three-headed monster.

First, conventional threats, such as social engineering and malware, persist and need the same due diligence as before. Second, enterprises lacking a robust security and compliance layer are ill-prepared to face conventional threats augmented with AI. Consider phishing emails, for instance. With AI, cybercriminals can create far more targeted and effective emails. IBM Security teams have found that AI capabilities can reduce the time required to craft an effective phishing email by 99.5%. Third, there is a new breed of AI-specific threats, such as prompt injection, model extraction, evasion attacks, and other adversarial techniques. Though not widespread yet, these are likely to proliferate as the market for AI-driven tools, software, products, and systems expands.

Key Security Considerations

Securing the AI product pipeline starts with updating governance, risk, and compliance (GRC) strategies. Embedding these principles from the beginning and treating them as core requirements accelerates secure and compliant product innovation. A design- and governance-oriented approach to AI software development is of utmost importance, especially given emerging AI regulations and frameworks such as the EU AI Act and the NIST AI Risk Management Framework, along with industry-specific regulations like HIPAA. Enterprises that integrate GRC capabilities into their AI product development clear a smoother path to value.

However, the key question is how to ensure the adoption and integration of these capabilities. To help answer it, we have listed a series of questions.

- Have you established and communicated guidelines addressing the use of organization data within third-party and public applications?

- Have you conducted threat modeling to identify and manage threat vectors?

- Have you shortlisted open-source models that have been properly scanned, tested, and vetted?

- How are you securing training data workflows, both in transit and at rest?

- What measures are you taking to improve API and plug-in integration security?

- What is the action plan if models produce malicious outputs, behave unexpectedly, or raise security issues?

- Do you have role-based access control, multifactor authentication, and identity federation in place to manage access to training data and models?

- Are you managing compliance with regulations for data security, privacy, and ethical AI utilization?

If you have thorough and well-supported answers to these questions, you’re well-positioned to proceed with your AI product development. If not, you should focus on the next steps.

Ensuring Secure AI Product Development: Exploring Practical Strategies

Technical Safeguards and Controls

Robust model access controls are the foundation of secure AI product development. Organizations should implement role-based permissions, strong authentication, and detailed access logging, and monitor usage patterns for anomalies.
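To make role-based permissions and access logging concrete, here is a minimal Python sketch. The role names, the `Action` values, and the `ROLE_PERMISSIONS` mapping are all hypothetical and chosen only for illustration; a real deployment would likely load its policy from an identity provider or policy store and pair it with proper authentication.

```python
import logging
from dataclasses import dataclass
from enum import Enum

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("model_access_audit")


class Action(Enum):
    PREDICT = "predict"
    READ_WEIGHTS = "read_weights"
    UPDATE_MODEL = "update_model"


# Hypothetical role-to-permission mapping (an assumption for this sketch).
ROLE_PERMISSIONS = {
    "analyst": {Action.PREDICT},
    "ml_engineer": {Action.PREDICT, Action.READ_WEIGHTS},
    "ml_admin": {Action.PREDICT, Action.READ_WEIGHTS, Action.UPDATE_MODEL},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def is_allowed(user: User, action: Action) -> bool:
    """Return True if the user's role grants the action.

    Every decision, allowed or denied, is written to the audit log so that
    usage patterns can be reviewed later. Unknown roles get no permissions.
    """
    allowed = action in ROLE_PERMISSIONS.get(user.role, set())
    audit_log.info(
        "user=%s role=%s action=%s allowed=%s",
        user.name, user.role, action.value, allowed,
    )
    return allowed
```

In this sketch, a call such as `is_allowed(User("sam", "analyst"), Action.READ_WEIGHTS)` is denied and still logged, which is the behavior that makes later monitoring of usage patterns possible.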

| Control | What it involves |
| --- | --- |
| Model Access Controls | Implement cryptographic authentication and role-based access |
| Differential Privacy | Protect user data while enabling AI training |
| Runtime Monitoring | Deploy AI-specific intrusion detection systems (IDS) to detect malicious inputs |
| Encrypted Computation and Federated Learning | Use decentralized data to train models while preserving privacy |
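As one illustration of differential privacy, the sketch below applies the Laplace mechanism to a simple counting query. It is a teaching example under stated assumptions, not a production implementation: the function name `private_count` and its parameters are hypothetical, and real systems would normally rely on a vetted differential privacy library and track the total privacy budget across queries.

```python
import random


def private_count(records, predicate, epsilon, rng, sensitivity=1.0):
    """Return a noisy count of records matching predicate.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity defaults to 1. Smaller epsilon means more noise and
    stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    # A Laplace(0, b) sample with b = sensitivity / epsilon can be drawn as
    # the difference of two independent exponential samples with rate 1 / b.
    rate = epsilon / sensitivity
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise


if __name__ == "__main__":
    rng = random.Random()  # pass a seeded Random only for reproducible demos
    users = [{"age": age} for age in range(18, 80)]
    noisy = private_count(users, lambda u: u["age"] >= 65, epsilon=0.5, rng=rng)
    print(f"Noisy count of users aged 65+: {noisy:.1f}")
```

Each individual answer is perturbed, yet the noise is centered on zero, so aggregate statistics remain useful while any single user's presence in the data is masked.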

Balance AI Innovation with Security and Compliance

Process and Organizational Controls

For an AI development project to succeed, it is important to have cross-functional AI governance teams with stakeholders from legal, technical, security, and management functions. Regular governance audits help keep the project aligned with organizational goals.

| Control | What it involves |
| --- | --- |
| Cross-Functional Governance Teams | Involve security, legal, and business stakeholders in AI risk management |
| AI Security and Compliance Training | Train developers on compliance mandates and adversarial AI threats |
| Incident Response Planning | Develop AI-specific security incident response protocols |
| Third-Party AI Component Evaluation | Evaluate AI vendors for supply chain vulnerabilities and security risks |
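One small, concrete piece of third-party component evaluation is checking that a downloaded model artifact matches a digest published by its vendor. The sketch below shows this with Python's standard library. The function names and the idea of a pinned SHA-256 value supplied by the vendor are assumptions for illustration; a checksum confirms integrity only and does not replace scanning or vetting the model itself.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned value.

    expected_sha256 is assumed to come from a trusted channel, such as the
    vendor's signed release notes.
    """
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A pipeline could call `verify_artifact` before loading any external model file and refuse to proceed when it returns `False`.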

Future-Proofing the AI Security and Compliance Strategy

Innovative Technologies and Approaches

AI security automation is gaining traction, and other emerging approaches can strengthen security and compliance strategies against future challenges.

| Approach | What it involves |
| --- | --- |
| AI Security Automation | Use self-healing AI systems that detect and remediate security issues autonomously |
| Formal Verification for AI Models | Mathematically prove the correctness and security properties of the AI model |
| Zero-Trust Architectures for AI | Prevent unauthorized data tampering by restricting AI model access |
| Quantum-Resistant AI Security | Prepare for cryptographic changes in AI security |

Developing Adaptive Compliance Capabilities

Organizations can gain an edge over competitors through regulatory intelligence capabilities. Early awareness of regulatory changes helps organizations adapt faster and implement the required policies more efficiently while avoiding penalties.

| Capability | What it involves |
| --- | --- |
| Regulatory Intelligence | Track and interpret changing compliance requirements with AI-driven tools |
| Flexible Compliance Frameworks | Accommodate new regulations by designing adaptable governance structures |
| Industry Engagement | Collaborate with industry groups, regulatory bodies, and standardization efforts |
| AI Governance Leadership | Participate actively in policy discussions and contribute to shaping AI best practices |

End Note

AI is transforming industries, but security and compliance challenges come with it. Mitigating these challenges calls for a proactive approach. With the AI product landscape evolving rapidly, balancing innovation with responsible practices is essential. Start by assessing your existing AI security posture. Deploy governance structures that cover both operations and development. Train AI teams on business-specific risks and security strategies.

By partnering with leading AI software development companies, organizations can master these challenges and position themselves to lead the next wave of innovation in artificial intelligence. They can become pioneers in delivering powerful AI software while maintaining compliance, trust, and security. In an AI-driven future, this balance will separate leaders from laggards.