The digital economy is in overdrive, generating exabytes of data per day. Businesses are turning to artificial intelligence (AI) and machine learning solutions (ML) to harness the value of this data. These solutions have transformed lives and businesses. From robotic surgery in healthcare to self-driving cars in automotive and precision farming in agriculture, the AI/ML applications are vast and varied. At the core of these applications is the data annotation process.

Data annotation involves adding labels and tags to raw data. This creates foundational datasets that help machine learning algorithms understand their environment and perform desired tasks. Consider the example of an autonomous vehicle learning to navigate. Without annotated datasets, the driverless car algorithm remains blind to its environment. Another example is text analysis. Tags such as names, sentiments, phrases, etc., provide context and help the model comprehend natural language.

Table of Contents

Significance of Data Annotation

Key Data Annotation Challenges

Impact of Annotation Challenges on Businesses

In-House vs. Outsourced Data Annotation

Significance of Data Annotation

Just as humans need teachers, AI/ML algorithms require accurate data annotation to understand their surroundings and perform tasks. High-quality and accurately annotated datasets are continuously fed into machine learning algorithms to train them. The annotated datasets lay the foundation for high-performing AI/ML applications deployed across different industries. Other than training AI/ML models, data annotation is also important in terms of:

1. Handling Multiple Types of Data

Annotations vary depending on the data type used for training machine learning algorithms. These include images, videos, audio, text, and complex data like 3D visuals. For example, AI/ML models used in the healthcare industry are trained using accurately annotated medical images such as CT scans, X-rays, MRIs, etc. Thus, these models can easily detect cancers, tumors, and other anomalies.

2. Improving Model Accuracy

“Garbage in, garbage out” is true in the case of AI/ML models. Inaccurate and poorly annotated training data raises concerns over the model’s reliability and negatively impacts outcomes. Instead, accurate data annotation is the key to building reliable models. For example, accurately annotated objects, such as pedestrians, lanes, traffic signals, etc., help autonomous vehicles navigate the roads safely.

3. Supporting Natural Language Processing (NLP)

Natural language processing (NLP) enables machines to understand and interpret human language. Text data is annotated to help ML algorithms understand natural language and generate human-like responses. That’s how virtual assistants like Alexa, Siri, and Google understand commands and perform actions.

4. Enabling Computer Vision (CV)

Computer vision enables machines to “see” the world as humans do. Visual data, including images and videos, are annotated to help machines detect and identify objects. Computer vision-based models are used for facial recognition, medical imaging, human pose tracking, etc.

Given its importance, data annotation powers diverse AI/ML applications across industries, including security and surveillance, manufacturing, insurance, etc. These algorithms have made businesses more efficient, agile, and smart. No wonder the data annotation tools market is projected to grow to USD 80.97 billion by 2034 at a CAGR of 18.72%.

Even though data annotation is important for training AI/ML models, the process is easier said than done. It is full of challenges that prevent organizations from implementing ML algorithms and improving workflows. Thus, it’s important to know these common challenges and address them.

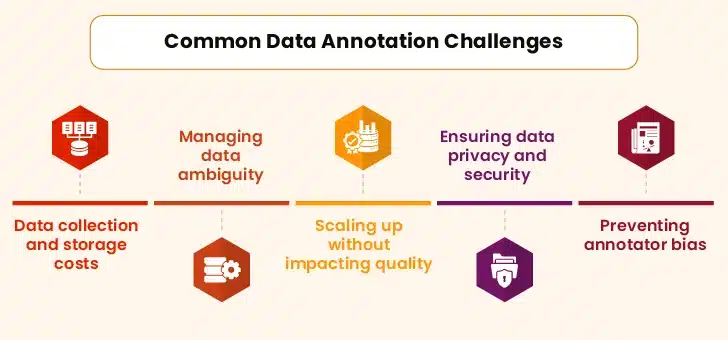

Key Data Annotation Challenges

I. Cost

Constant streams of high-quality data must be fed into machine learning algorithms. Collecting and storing such sheer volumes and variety of data adds to expenses. Additionally, maintaining data quality throughout the process adds up to costs. Not to forget annotation tools, infrastructure, and personnel that stretch thin a company’s budget!

II. Ambiguity

Assigning accurate labels becomes challenging when data has multiple interpretations. There is an increased chance of incorrect labels being assigned to ambiguous data. This negatively impacts the model’s performance and predictions. For example, “I saw a man with binoculars” can mean that the speaker saw a man through the binoculars. It can also mean that the speaker saw a man holding the binoculars.

III. Scalability

Data annotation in machine learning becomes more complex as the volume of data increases. Scaling data requirements without compromising on quality and process efficiency is challenging. Moreover, additional space is required to store and manage such humungous volumes of data. This is challenging for many organizations.

IV. Data Privacy and Security

Annotating sensitive data, such as personally identifiable information (PII), financial data, and medical records, raises privacy and security concerns. Any loophole in privacy policy can put the data at risk, exposing it to thefts, breaches, leaks, and other such vulnerabilities. Non-compliance with regulations and standards also leads to reputational damage, fines, and lawsuits.

V. Annotator Bias

The data annotation process can be highly subjective at times. An annotator’s bad biases and prejudices can lead to inconsistent and skewed annotations. This creeps into the AI/ML model, impacting its performance and reliability.

These data annotation challenges often lead to the failure of an AI/ML model. This, in turn, has detrimental consequences on businesses, some of which are discussed below.

Explore the Role of Gen AI in Enhancing Data Annotation Efficiency

Impact of Annotation Challenges on Businesses

A. Increased Operational Expenditure

Data annotation is a taxing exercise. It requires dedicated time and effort to be executed efficiently. Specialized tools and expertise are required to perform these tasks accurately, incurring significant costs. The costs might skyrocket for companies with large-scale and complex AI/ML development projects. All these efforts and resources are wasted when the project fails. No organization can afford such losses in terms of monetary and business opportunities!

B. Slow Model Development

Inconsistent data quality hampers the AI/ML model training process and delays the pace of innovation. This is detrimental for companies operating in industries that are volatile and experience heavy fluctuations. For instance, technology is one sector where prompt project delivery is important. In fact, a company, irrespective of industry vertical or domain, having a unique and competitive product in the pipeline, cannot afford slow model development.

C. Delayed Project Timelines

A substantial amount of time is spent correcting inaccurate and biased annotations, which delays some project timelines. Such delays pose significant problems, especially for organizations looking to reap the first-mover advantages and capture the market. Consider the example of automakers that develop machine learning models for autonomous vehicles, where such setbacks mean lost revenues. Take another case of a facial recognition system built for security and surveillance purposes. Any prejudices in such systems can widen the already existing societal gaps.

Addressing these challenges requires a thoroughly sought-after data annotation strategy with efficient methods and iterative feedback loops. This can be achieved through an in-house data annotation team or outsourcing to a professional provider. Picking the right option out of the two can be surprisingly complex as both these approaches have pros and cons.

In-House vs. Outsourced Data Annotation

- In-House Data Annotation

Most organizations prefer having an internal data annotation team as it offers direct control over the process. Data remains safe and secure as it isn’t shared with any third-party provider or transferred online. Internal resources ensure annotations are aligned with business-specific goals and use cases as they better understand the organization’s core values.

However, this approach involves significant upfront investments in terms of hiring professionals, procuring software, and maintaining hardware. The turnaround time is usually slower due to the time required for initial project setup. This is a downside for projects requiring quick turnaround times.

At the same time, in-house data annotation is suitable when the ML project involves large sets of sensitive information. Organizations can be assured the project is carried out safely by following the highest data privacy and security standards. Moreover, scalability and flexibility are limited due to operational issues.

- Data Annotation Outsourcing

Outsourcing offers access to diversely skilled and experienced annotators, subject matter experts, and data professionals. These specialists know what it takes to annotate datasets accurately and work accordingly. They are equipped with the latest data annotation tools and techniques. They follow the industry’s best practices to ensure the highest quality of training datasets. The professionals can efficiently annotate any variety and volumes of data without compromising quality.

Annotators use the right blend of skills, experience, and expertise to deliver consistent and coherent training datasets within the stipulated time and budget. Moreover, an experienced data annotation company offers inherent scalability and flexibility to cater to the project’s unique requirements. It is the best bet for organizations looking for cost-effective and high-quality training datasets for their AI/ML models.

The professionals implement data security protocols such as authorized access and encryption to safeguard sensitive business information and maintain its integrity. They also adhere to relevant data regulations and industry standards, such as GDPR, CCPA, HIPAA, etc., to ensure compliance.

Factor In-House Outsourcing Scalability Limited Inherent scalability Pricing Significant upfront investments Affordable due to pay-as-you-go model Management Diverts resources from core competencies Allows internal resources to focus on core competencies Security Higher security Assured security Turnaround Time Generally slow Quicker Control Direct control over the process Shared control over the process Despite the significant advantages of outsourced data annotation, many organizations still prefer having an in-house team. That’s because having an internal setup helps with cost-cutting and accomplishing AI development projects on a reasonable budget. The reality is otherwise as that’s when expenses start to accumulate. Stakeholders should consider other factors that eventually cost them more. This choice leads to losses, including the cost of correcting dataset inconsistencies and inaccuracies and scaling up resources to meet evolving business needs. This delays project timelines and reduces time-to-market. Instead, following certain best practices can help businesses balance cost and quality when outsourcing data annotation projects.

Best Practices for Balancing Cost and Quality

Companies should adopt the following best practices to maximize the benefits of outsourcing while ensuring high-quality annotations. Take a look:

1. Choosing the Right Outsourcing Partner

Choosing the right data annotation outsourcing partner is an uphill task. Organizations can evaluate a service provider based on their experience, technology used, scalability, turnaround time, pricing model, quality control mechanisms, and security protocols. Providers with industry-specific annotation expertise should be preferred for projects like medical data labeling.

2. Implementing a Hybrid Approach

A hybrid model combines in-house quality assurance with outsourced annotation. Professionals perform the time-consuming and resource-intensive task of data labeling within the stipulated time and budget, while in-house teams ensure data quality. Simply put, organizations can use internal teams to validate vendor work, ensuring that outsourced annotations meet expected standards.

3. Defining Clear Annotation Guidelines

Annotation is a highly subjective task. Organizations must define business goals, the model’s intended use case, and annotator guidelines to avoid annotator bias and subjectivity. Providing vendors with well-documented annotation guidelines minimizes interpretation errors. Additionally, sample annotations and continuous feedback loops help improve annotation accuracy and consistency.

4. Using AI-Assisted Annotation

Many data annotation companies integrate AI-powered pre-annotation tools. These tools assist human annotators in reducing errors and increasing efficiency. Organizations can collaborate with service providers that offer AI-augmented workflows for better results.

5. Conducting Regular Audits and Quality Checks

Organizations should conduct periodic audits and establish key performance indicators (KPIs) to monitor vendor performance. Metrics such as annotation accuracy, turnaround time, and inter-annotator agreement scores help assess quality.

Bottom Line

Outsourcing data annotation services offers significant cost benefits, including reduced labor expenses, lower infrastructure costs, and faster turnaround times. However, organizations must carefully manage quality considerations, as annotation accuracy directly impacts AI model performance.

By selecting the right service provider, defining strict quality guidelines, and adopting a hybrid approach, businesses can achieve the optimal balance between cost savings and high-quality annotations. As AI-driven industries continue to grow, businesses that strategically outsource data annotation will gain a competitive edge in developing robust and accurate machine learning models.