Imagine you’re trying to solve a puzzle, but some of the pieces don’t fit right. That’s what it’s like when you work with data that hasn’t been cleaned. Data cleansing is the process of fixing errors, removing duplicates, and making sure all the information is consistent and accurate. It’s a foundational step in preparing data for training AI/ML models or making business decisions.

But what happens if you skip this step? Poor-quality data can lead to bad decisions and incorrect conclusions. For example, if you’re training an AI model to predict customer behavior but your data has duplicate records, outdated information, or irrelevant entries, the model may render biased outputs.

Data cleansing helps you avoid these problems. It ensures that your data is ready to use, and your AI models perform well. With the right tools and techniques, you can efficiently clean your data and get the most out of it. This detailed post will walk you through the proven tools and practices for data cleansing. It also delves into the common mistakes that you need to avoid during data cleansing.

10 Popular Data Cleansing Tools for High-Quality Training Data

Take a deep dive to explore the curated list of data cleansing solutions that help ensure high-quality training datasets for AI and ML projects.

1. OpenRefine

OpenRefine is a free, open-source data cleansing tool that helps clean and transform messy data. It allows users to explore large datasets, remove duplicates, and transform data into different formats. It is ideal for both beginners and experts but requires some technical knowledge for complex tasks.

Key Features of OpenRefine

- Handles large datasets with various formats (CSV, TSV, Excel, etc.).

- Clustering feature for grouping similar data and fixing inconsistencies

- Tracks all cleaning steps with an undo/redo option

- Allows data transformation and reconciliation with external sources

- Supports both local and web-based data processing

| Pros of OpenRefine | Cons of OpenRefine |

|---|---|

| Clustering feature | Requires technical knowledge |

| Secure local data processing | Limited database integration |

| Supports data transformation | Outdated interface |

| User-friendly interface | Slower performance with large datasets |

| Undo/Redo functionality | Learning curve for beginners |

2. Trifacta Wrangler

Trifacta Wrangler uses machine learning to clean and transform data efficiently. It suggests transformations to improve data quality and offers tools for creating visual pipelines.

Key Features of Trifacta Wrangler

- Machine learning suggests data-cleaning transformations

- Visual interface for creating workflows and pipelines

- Real-time monitoring of data quality during processing

- Cloud-based tools with collaboration features

- Supports integration with multiple data sources

- Offers automation for repetitive cleaning tasks

| Pros of Trifacta Wrangler | Cons of Trifacta Wrangler |

|---|---|

| Machine learning-based automation | Expensive for small businesses |

| Intuitive visual interface | Limited free version |

| Visualization support | Requires training for advanced use |

| Free desktop version | Not ideal for massive datasets |

| Simplifies data preparation | Subscription required |

3. Winpure Clean & Match

Winpure Clean & Match specializes in cleaning business and customer data. It features fuzzy matching, deduplication, and rule-based cleaning, making it suitable for CRM systems.

Key Features of Winpure Clean & Match

- Fuzzy matching for identifying and fixing typos or duplicates

- Rule-based cleaning for custom data transformations

- Multi-language support for global datasets

- Integration with CRM systems like Salesforce

- Batch processing for handling large datasets

- Simple user interface for non-technical users

| Pros of Winpure Clean & Match | Cons of Winpure Clean & Match |

|---|---|

| Easy-to-use interface | Not suitable for large datasets |

| Affordable pricing | Limited advanced features |

| Eliminates duplicates | No cloud-based version |

| User-friendly interface | Limited scalability |

| Compliance with GDPR | Basic reporting tools |

4. TIBCO Clarity

TIBCO Clarity is a cloud-based data cleansing tool offering ETL capabilities, deduplication, and data profiling. It supports batch processing and integrates with various file formats and online repositories.

Key Features of TIBCO Clarity

- ETL (Extract, Transform, Load) capabilities for complex tasks

- Deduplication tools to remove redundant entries

- Batch processing to handle large datasets efficiently

- Integration with diverse file formats and databases

- Undo feature to reverse cleaning actions if needed

- Cloud-based platform for easy accessibility

| Pros of TIBCO Clarity | Cons of TIBCO Clarity |

|---|---|

| Supports batch processing | Subscription required |

| Cloud-based convenience | Complex interface for beginners |

| Handles diverse file formats | No free version is available |

| ETL functionality | Requires training |

| Undo transformation feature | Limited offline functionality |

5. Melissa Clean Suite

Melissa Clean Suite focuses on real-time data cleaning for CRM systems. It verifies and corrects data during collection, ensuring high-quality inputs.

Key Features of Melissa Clean Suite

- Real-time data validation during collection or entry

- Autocomplete and verification tools for addresses and names

- Batch processing support for bulk cleaning tasks

- Seamless integration with CRM systems like Salesforce/Dynamics

- Marketing segmentation tools to organize customer data effectively

- Ensures compliance with postal standards

| Pros of Melissa Clean Suite | Cons of Melissa Clean Suite |

|---|---|

| Real-time data cleaning | Limited to CRM platforms |

| Improves data accuracy | Less versatile for general tasks |

| Handles diverse file formats | No free version is available |

| Data enrichment | No free version |

| Boosts email deliverability | High cost for small businesses |

6. IBM Infosphere Quality Stage

Melissa Clean Suite focuses on real-time data cleaning for CRM systems. It verifies and corrects data during collection, ensuring high-quality inputs.

Key Features of IBM Infosphere Quality Stage

- Over 200 pre-built quality rules to automate common cleaning tasks

- Data profiling to understand patterns and inconsistencies in datasets

- Supports governance processes like master data management

- Cloud or on-premise deployment options available

- Scoring system to measure data integrity and quality improvements

- Handles large-scale enterprise-level datasets

| Pros of IBM Infosphere Quality Stage | Cons of IBM Infosphere Quality Stage |

|---|---|

| Ideal for big data projects | Expensive subscription costs |

| Supports ETL workloads | Requires technical expertise |

| Enhances decision-makings | Steep learning curve |

| Comprehensive profiling tools | Resource-intensive |

| Improves data quality | Not beginner-friendly |

7. Talend Open Studio

It is an open-source data cleansing tool offering a graphical interface for cleaning, transforming, and enriching datasets across multiple sources.

Key Features of Talend Open Studio

- Graphical interface for designing workflows without coding skills

- Open-source tool with flexibility for customization

- Supports integration with multiple databases and systems

- Handles complex data transformations effectively

- Provides advanced options like deduplication and standardization

- Allows automation of repetitive cleaning processes

| Pros of Talend Open Studio | Cons of Talend Open Studio |

|---|---|

| Free and open-source | High memory requirements |

| Powerful integration capabilities | Steep learning curve |

| Offers advanced cleaning options | Resource-intensive |

| Graphical workflow design | Requires technical knowledge |

| Flexible customization options | Limited support for non-tech users |

8. DataCleaner

DataCleaner is a free tool focused on profiling to understand the structure of datasets. It helps users identify errors, remove duplicates, and standardize data formats. The simpler UI of this tool makes it suitable for both technical and non-technical users.

Key Features of DataCleaner

- Removes duplicate records for cleaner datasets

- Profiling capabilities to detect errors in datasets

- Standardization features to ensure uniformity across fields

- Integration with various file formats and databases

- Tracks data quality over time to maintain consistency

- User-friendly interface suitable for beginners

- Free tool with basic functionalities for small-scale projects

| Pros of DataCleaner | Cons of DataCleaner |

|---|---|

| Free to use | Advanced features need expertise |

| Simple and user-friendly | Limited scalability |

| Deduplication tools included | Basic reporting features |

| Works well with diverse data sources | No cloud-based version |

| Identifies data quality issues | Not ideal for big data projects |

9. Pandas (Python Library)

Pandas is a Python library widely used in scripting-based data manipulation and cleaning tasks.

Key Features of Pandas (Python Library)

- Automates cleaning through reusable scripts

- Handles missing values, duplicates, and inconsistent formats

- Ideal for small to medium-sized datasets

- Support advanced options like deduplication

- Offers powerful tools for slicing, filtering, and transforming data

| Pros of Pandas | Cons of Pandas |

|---|---|

| Free and open-source in nature | Steep learning curve |

| Highly flexible | Requires programming knowledge |

| Automates repetitive tasks | Performance issues with large datasets |

| Integrates well with other Python libraries | No graphical user interface |

| Ideal for scripting-based tasks | Limited scalability |

10. Data Ladder

Data Ladder is a popular data cleansing tool for data quality and cleansing. It is engineered to help businesses clean, match, and transform their data. The tool is widely used for deduplication, profiling, and enrichment of datasets.

Key Features of Data Ladder

- Provides insights into data structure and patterns

- Automates removal of duplicate records using advanced algorithms

- Identifies similarities across datasets without unique identifiers

- Ensures consistency in address formats using USPS databases

- Combines datasets while avoiding data loss

| Pros of Data Ladder | Cons of Data Ladder |

|---|---|

| Easy-to-use interface | Subscription required |

| High accuracy in matching | Limited governance features |

| Supports multiple data formats | Not ideal for very large datasets |

| Automates deduplication | Requires technical setup |

| Integrates with modern systems | Lacks advanced analytics tools |

Strategies for Improving Data Quality Through Data Cleansing Services

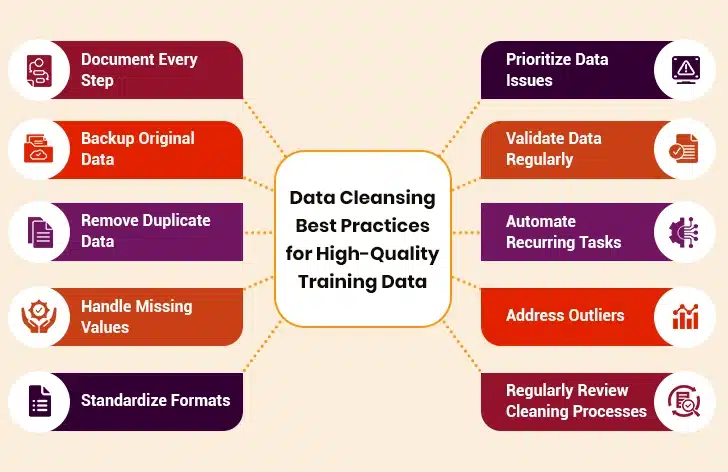

Data Cleansing Best Practices for High-Quality Training Data

Here are the best data cleansing techniques that need to be followed religiously to achieve high-quality data for training AI and ML models.

I. Document Every Step

Maintain detailed records of the data cleaning process. This may include identified issues, corrections applied, and assumptions made. Documenting every step ensures transparency and allows future replication of the cleaning process.

Example: While cleaning a customer database, document steps like removing duplicates and fixing typos.

II. Backup Original Data

Always keep a copy of the raw dataset before starting the cleaning process. This helps compare cleaned data with the original to avoid losing valuable information.

Example: Save a backup of raw sales data before removing outliers or irrelevant entries.

III. Remove Duplicate Data

Duplicates can distort analysis and waste storage space. Identify and remove duplicates using tools to streamline datasets.

Example: Use fuzzy matching to find similar customer records like “John Doe” and “Jon Doe.”

IV. Handle Missing Values

Missing values can impact data accuracy. Replace them with appropriate estimates, averages, or placeholders, or remove rows with excessive missing data.

Example: Fill missing age values in a survey dataset with the average age of respondents.

V. Standardize Formats

Ensure consistency in formats across datasets, such as date formats, currency symbols, or text cases. This avoids confusion during analysis.

Example: Convert all dates in a similar format like “YYYY-MM-DD.”

VI. Prioritize Data Issues

Focus on fixing critical errors first, such as incorrect values or missing entries that significantly impact analysis results.

Example: In a financial dataset, prioritize correcting transaction amounts over formatting inconsistencies.

VII. Validate Data Regularly

Set validation rules to check for errors or discrepancies during data entry or processing. This helps maintain consistent quality over time.

Example: Use validation scripts to flag negative sales figures in monthly reports.

VIII. Automate Recurring Tasks

Use tools and scripts to automate tasks like deduplication and typo correction.

Example: Use Python scripts like NumPy to clean large datasets by automating duplicate removal and typo fixes.

IX. Address Outliers

Outliers can skew results and misrepresent trends. Identify extreme values using statistical methods and decide whether to transform or remove them.

Example: Detect unusually high sales figures using boxplots and investigate their validity.

X. Regularly Review Cleaning Processes

Data cleaning is ongoing; periodically review processes to adapt to new issues or requirements as datasets grow or change.

Example: Update cleaning rules for customer data when new fields like “social media handles” are added to the database.

Data Cleansing: The Backbone of Predictive Modeling

Common Mistakes to Avoid in Data Cleansing

It is strongly recommended to avoid common data cleansing mistakes to have accurate and reliable data. Listed below are the mistakes that need to be avoided when cleaning datasets.

1. Ignoring Duplicate Data

Duplicate entries can distort analysis and lead to inaccurate results. Failing to identify and remove duplicate waste storage creates confusion.

2. Inconsistent Data Formatting

Inconsistent formats, such as varying date styles or numerical scales, can hinder analysis and lead to misleading insights.

3. Neglecting Missing Values

Overlooking missing values can compromise data quality. It’s important to address them by imputing values or removing affected rows.

4. Failing to Validate Data

Skipping validation steps can result in using inaccurate or incomplete data, which compromises the reliability of outcomes.

5. Neglecting Text Data Cleaning

Text data often contains spelling variations, special characters, or inconsistent capitalization, which can interfere with analysis.

6. Overlooking Outliers

Outliers can skew results and misrepresent trends. Failing to address them may lead to flawed conclusions.

7. Skipping Standardization

Failing to standardize data formats across datasets can cause compatibility issues during integration or analysis.

8. Not Backing Up Original Data

Starting the cleaning process without backing up raw data increases the risk of losing valuable information during edits.

9. Cleaning Without a Plan

Jumping into data cleansing without identifying issues or setting priorities wastes time and may overlook critical errors.

10. Over-Cleaning Data

Excessive cleaning can remove useful information or oversimplify datasets, reducing their value for analysis.

Summing Up

Data cleansing is a crucial step in preparing high-quality training data. It helps remove errors, inconsistencies, and duplicates, which are essential for high-quality training data. If you also want to cleanse your data to improve the accuracy of your AI & ML models, you may use appropriate tools and follow the right data cleansing methodology discussed above in this detailed post.