Businesses are always looking for new ways to use data analytics to make better decisions in today’s data-driven world. According to Fortune Business Insights, the global data analytics market is projected to grow at a compound annual growth rate (CAGR) of 13%, reaching $924.39 billion by 2032. Last year, a growing number of businesses reported that their investments in data and analytics produced measurable returns. In addition, the 2024 Data and AI Leadership Executive Survey found that the percentage of companies that have developed a data and analytics culture more than doubled this year, rising from 20.6% to 42.6%.

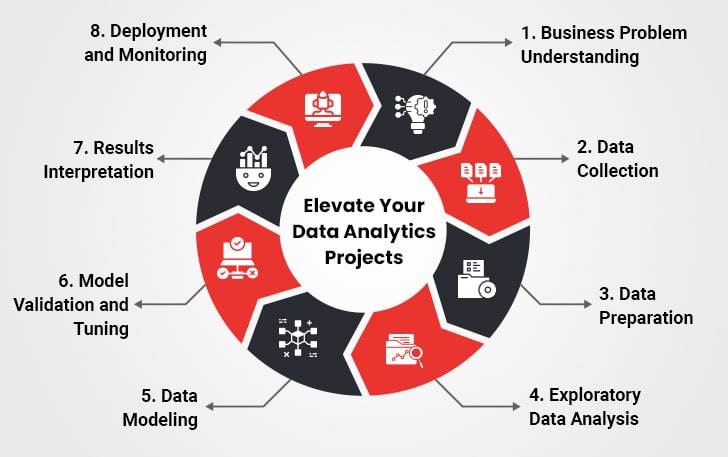

Understanding the data analytics lifecycle is crucial for any organization that relies on data-driven decision-making. This blog offers a detailed, step-by-step guide to managing the eight essential stages of a data analytics project. By mastering these stages, companies can strengthen their analytical capabilities, achieve better business outcomes, and stay competitive.

1. Business Problem Understanding

A thorough grasp of the business challenge or goal is the first step in any effective data analytics project. This process entails determining the most important problems that require attention and setting quantifiable objectives. For example, a company may wish to increase operational efficiency, improve sales forecasting, or lower customer attrition. The project will remain focused and in line with corporate goals if the problem is well defined.

Example Scenarios

- Customer Churn Analysis: Organizations can find trends that lead to customer attrition and create retention plans by examining customer behavior and transaction history.

- Sales Forecasting: Accurate sales forecasts help businesses plan marketing, staffing, and inventory more efficiently.

- Operational Efficiency: Data analytics can pinpoint process bottlenecks and suggest improvements that streamline operations.

Consulting stakeholders is another common way to understand the business challenge, because it brings in a range of viewpoints and helps refine project goals. This collaboration ensures that the analytics approach aligns with broader organizational goals.

2. Data Collection

Once the business challenge has been identified, the next step is data collection. Data can come from many places, including market studies, social media sites, corporate databases, and outside vendors. Because the accuracy of an analysis depends heavily on its inputs, it is imperative to ensure that the data is accurate and relevant.

Sources of Data

- Internal Databases: CRM systems, sales records, and customer databases

- External Sources: Social media, public datasets, and industry reports

- Third-Party Vendors: Specialized datasets tailored to specific needs

Methods of Data Collection

- Surveys: Getting firsthand responses from clients or stakeholders.

- Web scraping: Employing automated tools to extract data from websites.

- APIs: Using Application Programming Interfaces to retrieve data from external platforms.

- IoT Devices: Gathering data from connected sensors and devices.

Documenting data sources and collection procedures makes subsequent analyses transparent and reproducible. Businesses should also establish data governance procedures to ensure compliance and maintain stakeholder trust.
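Documenting sources can be partly automated. The Python sketch below assumes data arrives as CSV text and records a hypothetical `SourceRecord` for each load: source name, collection method, UTC retrieval time, row count, column names, and a SHA-256 checksum of the raw text so later runs can confirm they used the same input. The class, function, and field names are illustrative, not a standard.

```python
import csv
import hashlib
import io
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class SourceRecord:
    """Illustrative provenance metadata captured for each dataset pulled into a project."""
    name: str            # human-readable source, e.g. "CRM export"
    method: str          # collection method, e.g. "API", "survey", "web scraping"
    retrieved_at: str    # ISO-8601 UTC timestamp of the pull
    row_count: int
    columns: list[str]
    sha256: str          # checksum of the raw text, so later runs can verify the input is unchanged


def load_csv_with_provenance(raw_text, name, method):
    """Parse CSV text and return (rows, SourceRecord) describing where the rows came from."""
    digest = hashlib.sha256(raw_text.encode("utf-8")).hexdigest()
    reader = csv.DictReader(io.StringIO(raw_text))
    rows = list(reader)
    record = SourceRecord(
        name=name,
        method=method,
        retrieved_at=datetime.now(timezone.utc).isoformat(),
        row_count=len(rows),
        columns=list(reader.fieldnames or []),
        sha256=digest,
    )
    return rows, record


raw = "customer_id,plan,monthly_spend\n1,basic,20\n2,pro,55\n"
rows, record = load_csv_with_provenance(raw, name="CRM export", method="internal database")
print(record.row_count, record.columns)  # 2 ['customer_id', 'plan', 'monthly_spend']
```

Storing records like this next to each extract gives later analysts a way to trace a result back to the exact input it was computed from.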

3. Data Preparation

Data preparation involves cleaning and transforming the collected data so that it is ready for analysis. This stage entails handling outliers, resolving missing values, and converting data into usable formats.

Steps in Data Preparation

- Data Cleaning: Remove duplicates, handle missing values, and correct errors

- Data Transformation: Normalize or scale numerical features and encode categorical variables

- Analytic Sandbox Creation: Set up an isolated environment where analysts can explore and test data without affecting production systems
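The cleaning and transformation steps above can be sketched in plain Python, assuming records are dictionaries with `None` marking a missing value; the field names are hypothetical. In practice, pandas (`drop_duplicates`, `fillna`, `get_dummies`) or scikit-learn (`MinMaxScaler`, `OneHotEncoder`) would usually do this work.

```python
from statistics import median

# Toy records; None marks a missing value. Field names are hypothetical.
records = [
    {"customer_id": 1, "plan": "basic", "monthly_spend": 20.0},
    {"customer_id": 2, "plan": "pro", "monthly_spend": None},
    {"customer_id": 2, "plan": "pro", "monthly_spend": None},  # exact duplicate
    {"customer_id": 3, "plan": "enterprise", "monthly_spend": 120.0},
    {"customer_id": 4, "plan": "pro", "monthly_spend": 60.0},
]


def drop_duplicates(rows):
    """Keep the first occurrence of each identical record."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def impute_median(rows, col):
    """Replace missing values in `col` with the median of the observed values."""
    fill = median(r[col] for r in rows if r[col] is not None)
    return [{**r, col: fill if r[col] is None else r[col]} for r in rows]


def min_max_scale(rows, col):
    """Rescale `col` linearly to the [0, 1] range."""
    values = [r[col] for r in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, col: (r[col] - lo) / span} for r in rows]


def one_hot(rows, col):
    """Replace a categorical column with one 0/1 indicator column per category."""
    categories = sorted({r[col] for r in rows})
    out = []
    for r in rows:
        new = {k: v for k, v in r.items() if k != col}
        for c in categories:
            new[f"{col}_{c}"] = int(r[col] == c)
        out.append(new)
    return out


clean = drop_duplicates(records)
clean = impute_median(clean, "monthly_spend")
clean = min_max_scale(clean, "monthly_spend")
clean = one_hot(clean, "plan")
for row in clean:
    print(row)
```

Median imputation is used here because it is less sensitive to outliers than the mean, but it is only one option. In a real project, the median and the min/max used for scaling should be computed on the training data alone and reused for validation and test data, so no information leaks from the evaluation sets.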

A strong data analytics pipeline is built on well-prepared data, which allows for more precise outcomes.

Regular audits of data sources and preparation methods are advised to further improve data quality. These audits can reveal inconsistencies early and prevent downstream issues that compromise analysis integrity.
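A lightweight audit can be scripted and rerun whenever a source is refreshed. This Python sketch assumes a list-of-dictionaries layout with hypothetical column names; it reports missing values per column, exact duplicate rows, and numeric outliers using the common 1.5 × IQR rule (Tukey's fences), which is one outlier convention among several.

```python
from statistics import quantiles


def audit(rows, numeric_cols):
    """Report row count, missing values per column, exact duplicates, and IQR outliers."""
    def is_missing(v):
        return v is None or v == ""

    report = {"row_count": len(rows), "missing": {}, "duplicates": 0, "outliers": {}}

    # Missing values per column (None or empty string counts as missing here).
    for col in rows[0]:
        report["missing"][col] = sum(1 for r in rows if is_missing(r.get(col)))

    # Exact duplicate rows: total rows minus distinct rows.
    keys = [tuple(sorted(r.items())) for r in rows]
    report["duplicates"] = len(keys) - len(set(keys))

    # Outliers by the 1.5 * IQR rule, using quartiles of the non-missing values.
    for col in numeric_cols:
        values = [r[col] for r in rows if not is_missing(r.get(col))]
        q1, _, q3 = quantiles(values, n=4)
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        report["outliers"][col] = [v for v in values if v < low or v > high]

    return report


# Hypothetical order data with one missing amount, one duplicated row, and one extreme value.
orders = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": 12.0},
    {"order_id": 3, "amount": 11.0},
    {"order_id": 4, "amount": 13.0},
    {"order_id": 5, "amount": 12.0},
    {"order_id": 6, "amount": 11.0},
    {"order_id": 7, "amount": 95.0},
    {"order_id": 8, "amount": None},
    {"order_id": 1, "amount": 10.0},
]
print(audit(orders, numeric_cols=["amount"]))
```

Tracking these counts over time makes a sudden jump in missing values or duplicates visible before it reaches a model.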

4. Exploratory Data Analysis

Exploratory Data Analysis (EDA) helps summarize data characteristics, often through visualization. This phase identifies trends, patterns, and anomalies that guide the next steps in the data analytics lifecycle.

Techniques in EDA

- Visualization: Examine data distributions using box plots, scatter plots, and histograms.

- Statistical Analysis: Compute the mean, median, and mode to summarize central tendency, and the standard deviation to measure variability.

- Pattern Recognition: Detect correlations and trends that inform further analysis.

EDA also provides an opportunity to validate assumptions about the data and confirm that it can support the business objectives. More sophisticated visualizations, such as correlation heatmaps or 3D scatter plots, can reveal deeper insights.

5. Data Modeling

Data modeling involves selecting algorithms and building models suited to the problem type. For instance, classification models predict categorical outcomes, whereas regression models forecast continuous variables. To detect overfitting and obtain an unbiased estimate of performance, the dataset is typically divided into training, validation, and test sets.
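One way to produce the three subsets is sketched below, assuming records can be shuffled independently (no time ordering). The helper name and the default 70/15/15 proportions are illustrative; scikit-learn's `train_test_split` is the usual library choice, and time-series data would need a chronological split instead of a random one.

```python
import random


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once with a fixed seed, then slice into disjoint train/validation/test sets."""
    if val_frac + test_frac >= 1:
        raise ValueError("validation and test fractions must leave room for training data")
    shuffled = list(rows)                  # copy so the caller's data is not reordered
    random.Random(seed).shuffle(shuffled)  # seeded RNG makes the split reproducible
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


records = list(range(100))  # stand-in for 100 customer records
train, val, test = train_val_test_split(records)
print(len(train), len(val), len(test))  # 70 15 15
```

The model is fit on `train`, candidate settings are compared on `val`, and `test` is used once at the end; reusing the test set for tuning would make the final estimate optimistic.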

Model Types

- Regression Models: Used to forecast sales revenue and other continuous indicators.

- Classification Models: Used to predict categorical outcomes, such as whether a customer will churn.

- Clustering Models: Used to group similar records, such as segmenting customers by demographics.

Model Evaluation Metrics

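- Accuracy: The percentage of all cases that are classified correctly

- Precision: The proportion of predicted positives that are actually positive (true positives divided by all predicted positives)

- Recall: The proportion of actual positives that the model correctly identifies (true positives divided by all actual positives)

- F1 Score: The harmonic mean of precision and recall, which balances the two

- ROC-AUC: The area under the ROC curve, which plots the true positive rate against the false positive rate across classification thresholds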
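To make these definitions concrete, the following Python sketch computes each metric from binary labels (1 = positive, for example a churned customer) and hypothetical model scores. scikit-learn offers equivalents in `sklearn.metrics` (`accuracy_score`, `precision_score`, `recall_score`, `f1_score`, `roc_auc_score`); the pure-Python version simply exposes the formulas. ROC-AUC is computed here through its equivalent interpretation: the probability that a randomly chosen positive receives a higher score than a randomly chosen negative.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are real
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real positives that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count as half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical churn labels (1 = churned) and model scores.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
scores = [0.9, 0.55, 0.6, 0.4, 0.2, 0.7, 0.8, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # 0.5 decision threshold

print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))
```

With these values the model finds 3 of the 4 churners (recall 0.75), but only 3 of its 5 churn predictions are correct (precision 0.6), a distinction that accuracy alone (0.625) does not reveal.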

Model interpretability and explainability are increasingly important to ensure stakeholder trust in results. By demystifying model predictions, tools such as SHAP or LIME can increase confidence in analytical results.

6. Model Validation and Tuning

After building the model, it’s essential to validate and fine-tune it for optimal performance. This ensures the model is robust and applicable to real-world scenarios.

Techniques for Validation and Tuning

- Cross-validation: Apply k-fold cross-validation, or stratified k-fold when classes are imbalanced, to estimate how the model performs on unseen data.

- Hyperparameter Tuning: Use grid search, random search, or Bayesian optimization to optimize model hyperparameters.

- Performance Monitoring: Evaluate the model’s performance regularly and make any required updates.
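Stratified k-fold cross-validation and grid search can be combined in a few dozen lines. This Python sketch assumes a k-nearest-neighbors classifier on synthetic two-dimensional data, with the number of neighbors as the hyperparameter being tuned; the model, data, and grid are illustrative choices. In practice, scikit-learn's `StratifiedKFold` and `GridSearchCV` handle this.

```python
import random
from collections import defaultdict
from statistics import mean


def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each test fold keeps roughly the overall class mix."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    # Shuffle within each class, then deal indices round-robin so every fold
    # receives a near-equal share of every class.
    ordered = []
    for label in sorted(by_class):
        idx = by_class[label]
        rng.shuffle(idx)
        ordered.extend(idx)
    folds = [[] for _ in range(k)]
    for pos, i in enumerate(ordered):
        folds[pos % k].append(i)
    for f in range(k):
        test = set(folds[f])
        train = [i for i in range(len(labels)) if i not in test]
        yield train, sorted(test)


def knn_predict(train_X, train_y, x, n_neighbors):
    """Majority vote among the n nearest training points (squared Euclidean distance)."""
    def sq_dist(p):
        return sum((a - b) ** 2 for a, b in zip(p, x))
    nearest = sorted(range(len(train_X)), key=lambda i: sq_dist(train_X[i]))[:n_neighbors]
    votes = [train_y[i] for i in nearest]
    return max(set(votes), key=votes.count)


def cv_accuracy(X, y, n_neighbors, k=5):
    """Mean accuracy over stratified k folds for one hyperparameter value."""
    fold_scores = []
    for train, test in stratified_kfold(y, k=k):
        tX = [X[i] for i in train]
        ty = [y[i] for i in train]
        correct = sum(knn_predict(tX, ty, X[i], n_neighbors) == y[i] for i in test)
        fold_scores.append(correct / len(test))
    return mean(fold_scores)


def grid_search(X, y, grid):
    """Evaluate every candidate in `grid`; return the best one and all mean CV scores."""
    results = {n: cv_accuracy(X, y, n) for n in grid}
    best = max(results, key=results.get)
    return best, results


# Synthetic, imbalanced data: 40 points of class 0 and 20 of class 1.
rng = random.Random(1)
X = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(40)]
X += [(rng.gauss(2.5, 1), rng.gauss(2.5, 1)) for _ in range(20)]
y = [0] * 40 + [1] * 20

best_n, scores = grid_search(X, y, grid=[1, 3, 5, 7])
print("best n_neighbors:", best_n, scores)
```

Stratification matters here because class 1 is only a third of the data; an unlucky random fold with very few class-1 points would give a noisy accuracy estimate.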

Documenting the model changes made during tuning provides a clear roadmap for future iterations. Involving cross-functional teams in validation can also bring fresh perspectives to model refinement.

7. Results Interpretation

Interpreting results translates model outputs into actionable insights. Present findings to stakeholders through clear dashboards, reports, or presentations, ensuring they’re both comprehensible and actionable.

Techniques of Communication

- Dashboards: Interactive visual summaries that deliver insights at a glance

- Reports: In-depth documentation that describes methods and results

- Presentations: Focused formats that highlight key insights to guide decisions

Adapt communication formats to the audience’s technical proficiency and decision-making role to increase engagement. Framing insights around the concrete business decisions they could inform helps findings resonate with stakeholders.

8. Deployment and Monitoring

Deploying the model into production involves integrating it with existing systems and ensuring its performance remains consistent over time. Real-time monitoring is critical for detecting issues like data drift or performance degradation.

Path to Deployment

- Infrastructure Setup: Provision data pipelines and computational resources

- Integration: Integrate models efficiently into existing processes and systems

- Monitoring: Use real-time analytics to track performance indicators and confirm that the model stays accurate
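Data drift is often quantified with the Population Stability Index (PSI), which compares a feature's distribution in production against its training-time baseline: PSI = Σ (A_i - E_i) · ln(A_i / E_i) over bins i, where E_i and A_i are the baseline and production shares of bin i. This Python sketch uses equal-width bins derived from the baseline (quantile bins are a common alternative) and simulated data. A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be set per use case.

```python
import math
import random


def psi(expected, actual, bins=10):
    """Population Stability Index of `actual` (production) against `expected` (baseline)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            b = int((v - lo) / width)
            b = min(max(b, 0), bins - 1)  # clamp values outside the baseline range into edge bins
            counts[b] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6  # floor for empty bins so the log term stays finite
    total = 0.0
    for e, a in zip(shares(expected), shares(actual)):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


rng = random.Random(7)
baseline = [rng.gauss(50, 10) for _ in range(5000)]
stable = [rng.gauss(50, 10) for _ in range(5000)]
shifted = [rng.gauss(60, 10) for _ in range(5000)]  # mean moved by one standard deviation
print(round(psi(baseline, stable), 3), round(psi(baseline, shifted), 3))
```

Computing PSI per feature on a schedule, and feeding the result into the monitoring dashboard, turns drift from something discovered after accuracy falls into something caught while it is developing.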

Automated alerts for performance deviations can significantly reduce downtime. Establishing feedback loops for ongoing learning helps the model adapt to shifting business conditions.
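An automated alert can start as a simple rolling-window check on labeled outcomes. In this Python sketch, the hypothetical `PerformanceMonitor` class compares rolling accuracy with a baseline and returns an alert message when it falls more than a tolerance below it. The window size, tolerance, and simulated data are illustrative, and a real system would forward the message to an alerting or paging tool rather than collect it in a list.

```python
from collections import deque


class PerformanceMonitor:
    """Hypothetical rolling-accuracy monitor that flags drops below a baseline."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def record(self, prediction, actual):
        """Log one labeled outcome; return an alert string if rolling accuracy has degraded."""
        self.outcomes.append(int(prediction == actual))
        if len(self.outcomes) < self.outcomes.maxlen:
            return None  # wait until the window is full before judging
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.baseline - self.tolerance:
            return f"ALERT: rolling accuracy {accuracy:.2f} below baseline {self.baseline:.2f}"
        return None


# Simulate 100 healthy predictions (90% correct), then a degraded stretch (75% correct).
monitor = PerformanceMonitor(baseline_accuracy=0.90, window=50)
alerts = []
for i in range(200):
    correct = (i % 10 != 0) if i < 100 else (i % 4 != 0)
    message = monitor.record(prediction=1, actual=1 if correct else 0)
    if message:
        alerts.append((i, message))
print(alerts[0] if alerts else "no alerts")
```

The window size trades sensitivity for stability: a short window reacts quickly but raises more false alarms, while a long one smooths out noise at the cost of slower detection.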

Challenges in the Data Analytics Lifecycle

| Challenge | Description |
|---|---|
| Data Quality Issues | Low-quality data leads to inaccurate results. Routine validation and cleaning mitigate this. |
| Resource Constraints | Projects need sufficient compute power and qualified staff. |
| Complexity in Integration | Advanced models may require significant modifications to existing systems. |

Investing in scalable infrastructure can resolve many of these issues. For instance, cloud platforms provide the flexibility to scale compute resources up or down as workloads change.

Best Practices for Projects Using Data Analytics

I. Adopt Agile Methodologies: To improve models and adjust to new information, employ iterative techniques

II. Prioritize Stakeholder Alignment: Share goals and progress regularly to keep stakeholders aligned

III. Stress Ethical Data Use: Comply with legal requirements and protect data privacy

IV. Put Training First: Give teams the tools they need to efficiently handle and analyze data

Encouraging a culture of continuous learning within the team can also enhance overall project efficiency.

Wrapping Up

Understanding and applying the data analytics lifecycle is vital for businesses aiming to thrive in a data-centric landscape. Each phase, from defining the problem to deployment, is integral to delivering actionable insights and measurable value. By mastering this lifecycle, organizations can drive innovation with confidence and improve the outcomes of their data analytics projects. Whether you’re planning projects for a team of data analysts or streamlining a data analytics pipeline, this guide provides a framework for success in 2025 and beyond. If you’re planning to invest in an industry-specific data analytics project, consider reaching out to a reliable analytics services partner with proven experience.