AI is evolving fast—but it’s hitting limits. Not because models aren’t powerful enough, but because the data feeding them isn’t keeping up. Whether you’re training a chatbot, building a recommendation system, or deploying a self-driving car, the model is only as good as the data behind it.

Getting AI-ready data isn’t simple, though. Customer-facing systems often need to process information in under 200 milliseconds to keep interactions flowing in real time. Poor-quality data doesn’t just produce wrong results; it drives up maintenance costs too. When training AI models, more data generally helps, but only if that data meets strict quality benchmarks.

Table of Contents

What Makes AI Truly Scalable Across Your Business?

Why Data Collection Stands Between You and Scalable AI

Enter Automated Data Collection

Benefits of Automated Data Collection

Tools and Techniques for Automated Data Collection

What Makes AI Truly Scalable Across Your Business?

Scalable AI isn’t just about handling bigger datasets. It’s about AI systems working effectively at the size, speed, and complexity your business needs. True scalability means your AI technologies adapt and grow across your entire organization without losing performance, reliability, or accuracy.

The path to scalable AI starts with isolated projects and moves toward organization-wide implementation. This creates an interconnected fabric of AI-driven operations that changes how businesses function at their core.

When we talk about scalable systems, three essential characteristics typically stand out:

- 1. Administrative scalability – Maintaining control even when numerous organizations use the system

- 2. Geographic scalability – Working effectively regardless of distance between users and resources

- 3. Load scalability – Improving performance based on available computing resources, either by adding more machines (horizontal scaling) or increasing a machine’s capacity (vertical scaling)

Scalable AI demands a complete approach to enterprise transformation. This means bringing together quality data from different business units, building robust infrastructure, and preparing your workforce to understand and act on AI outputs. As your AI implementation grows, the risks and complexities grow too, making governance and security more important than ever.

AI data collection services have become essential in this ecosystem. Scalable AI depends on comprehensive, high-quality data integration. AI data collection companies provide the infrastructure needed to maintain the speed and scale for AI systems to perform effectively in real-world situations. Without specialized data collection expertise, organizations struggle to gather enough data to train models that can scale across various business functions.

Why Data Collection Stands Between You and Scalable AI

Despite major advances in AI algorithms and computing power, data collection remains the biggest roadblock preventing businesses from achieving truly scalable AI. The numbers tell a troubling story: up to 87% of AI projects never even reach production, with poor data quality standing out as the main culprit.

Did you know data scientists spend between 60% and 80% of their time cleaning data instead of developing models? This preparation bottleneck turns what should be week-long projects into month-long slogs, causing major delays in AI deployment and scalability.

The financial consequences are serious. Organizations lose an average of $12.9 million annually due to data inefficiencies. Additionally, poor data quality costs businesses approximately $3.1 trillion annually through direct losses, missed opportunities, and cleanup efforts.

For AI systems to scale properly, they need massive amounts of high-quality, diverse, and well-labeled data. Yet collecting such data presents several tough challenges:

- I. Data Quality Issues: Incomplete, inconsistent, or noisy data ruins model accuracy. As the saying in AI goes, “Garbage in, garbage out”: if the data fed into AI models is not good, the outcomes won’t be dazzling either.

- II. Integration Challenges: Most businesses struggle with data silos across different departments, teams, and systems. These silos create incomplete insights and require manual, fragile integration processes that overburden data teams.

- III. Privacy and Regulatory Hurdles: As governments implement stricter data protection regulations like GDPR and CCPA, businesses face growing limitations on how they can collect and use data. This creates tension between AI’s need for vast amounts of information and legal requirements to limit data collection.

- IV. Scalability Constraints: As data volumes grow exponentially, especially with IoT devices generating unprecedented amounts of information, traditional data collection and quality management methods simply can’t keep up. Scaling these methods becomes prohibitively expensive and resource intensive.

- V. Real-time Requirements: Today’s AI applications demand data processing in near real time, creating additional challenges for data collection infrastructure.
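The data quality issue in the list above (incomplete or duplicated records) is the easiest one to check automatically. The following is a minimal sketch, assuming records arrive as Python dictionaries; the function name `quality_report` and the sample fields are hypothetical and chosen only for illustration.

```python
from collections import Counter

def quality_report(records, required_fields):
    """Summarize completeness and duplication for a list of dict records."""
    missing = Counter()
    seen = set()
    duplicates = 0
    for record in records:
        # Count empty or absent values per required field.
        for field in required_fields:
            value = record.get(field)
            if value is None or value == "":
                missing[field] += 1
        # Treat records with identical required-field values as duplicates.
        key = tuple(record.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {"total": len(records), "missing": dict(missing), "duplicates": duplicates}

# Illustrative sample data (not from any real system).
sample = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 1, "email": "a@example.com"},
]
report = quality_report(sample, ["id", "email"])
print(report)
```

A report like this can gate a pipeline: a batch with too many missing values or duplicates is held back before it reaches training.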

The very nature of machine learning techniques requires ingesting massive amounts of data for training and testing algorithms. These requirements directly conflict with data collection limitation principles, creating fundamental tensions in AI development.

Enter Automated Data Collection

What’s the solution to these data collection bottlenecks? Automation. Businesses are increasingly turning to automated approaches for scalable AI implementations. Automated data collection uses technology to gather, process, and analyze information efficiently with minimal human intervention. This approach now forms the backbone of modern business intelligence, working as a digital workforce that operates with a precision human teams cannot match.

How do you implement automated data collection? You have several options: programmatic methods using languages like Python, no-code platforms like Zapier, or hybrid approaches combining both. AI itself enhances the collection process through techniques like active learning, where the model flags the examples it is least sure about for human annotation and improves over time as that feedback is folded back into training.
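One common form of active learning is uncertainty sampling: send annotators only the items the model is least sure about. The sketch below assumes a binary classifier that outputs a probability per item; `select_for_labeling` and the scores are hypothetical names and values used for illustration.

```python
def select_for_labeling(probabilities, budget):
    """Return indices of the `budget` items whose probability is closest to 0.5."""
    ranked = sorted(
        range(len(probabilities)),
        key=lambda i: abs(probabilities[i] - 0.5),  # small distance = high uncertainty
    )
    return ranked[:budget]

# Illustrative model scores for five unlabeled items.
scores = [0.98, 0.52, 0.10, 0.45, 0.70]
to_label = select_for_labeling(scores, budget=2)
print(to_label)
```

Here the items scored 0.52 and 0.45 are selected, since the model is close to guessing on them, while the confident predictions are left alone.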

That’s why leading AI data collection companies now offer solutions that integrate these capabilities, helping businesses overcome the bottlenecks we discussed earlier. These systems continuously learn, minimizing workload while improving training data quality.


Benefits of Automated Data Collection

Automated data collection doesn’t just solve problems—it creates remarkable advantages for businesses implementing AI systems. When companies integrate automation into their data pipelines, they experience substantial improvements across multiple aspects of their operations.

1. Speed

Manual data collection simply can’t keep pace with today’s business demands. Automated data collection accelerates the entire data lifecycle dramatically. Businesses implementing end-to-end automated data pipelines achieve time savings of 80-90% for specific use cases. This speed enables real-time processing at sub-200ms speeds across the data supply chain, giving teams the ability to make faster decisions based on current information.
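A latency target like the sub-200ms figure is only useful if each stage is measured against it. This is a minimal sketch of timing one pipeline stage, assuming a single-process Python pipeline; `timed`, `normalize`, and the sample event are hypothetical.

```python
import time

LATENCY_BUDGET_MS = 200  # the sub-200 ms target discussed in the text

def timed(stage, payload):
    """Run one pipeline stage and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = stage(payload)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return result, elapsed_ms

def normalize(event):
    """Hypothetical stage: lowercase the field names of an incoming event."""
    return {key.lower(): value for key, value in event.items()}

result, elapsed_ms = timed(normalize, {"User": "u1", "Action": "click"})
within_budget = elapsed_ms < LATENCY_BUDGET_MS
print(result, within_budget)
```

In a real deployment the budget covers network hops and storage as well, so per-stage timings would typically be summed or traced end to end.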

2. Scale

The most compelling advantage of automated collection? Scalability. Unlike manual methods that require proportional increases in workforce, automated systems expand effortlessly to handle growing data volumes. This scalability becomes invaluable as your business grows, allowing you to gather information on thousands of companies simultaneously without adding staff.

Automated tools don’t discriminate between data types either—they process both structured and unstructured data seamlessly. This versatility means you’re not limited by data format when expanding your AI capabilities.

3. Cost

Implementing automated systems requires upfront investment—but don’t let that deter you. The long-term financial benefits far outweigh the initial costs. Research shows automated approaches reduce expenses through decreased labor requirements and improved operational efficiency.

4. Consistency

Human error plagues manual data collection efforts. Automated data collection greatly reduces this problem, ensuring a uniformity across datasets that manual processes cannot match. By implementing automated cleansing, businesses establish reliable workflows that maintain data quality standards.

This consistency supports data uniformity, making analysis and interpretation significantly easier for AI models. The end result? More reliable and transparent AI outcomes that stakeholders can trust for decision-making.
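Automated cleansing usually means applying the same normalization rules to every row, every time. The sketch below assumes contact-style rows with `name` and `email` fields; the function `cleanse` and the sample rows are hypothetical.

```python
def cleanse(rows):
    """Normalize names and emails, then drop rows whose email was already seen."""
    cleaned, seen = [], set()
    for row in rows:
        email = row["email"].strip().lower()
        # Collapse repeated whitespace and apply consistent capitalization.
        name = " ".join(row["name"].split()).title()
        if email in seen:
            continue
        seen.add(email)
        cleaned.append({"name": name, "email": email})
    return cleaned

# Illustrative input with inconsistent spacing and casing.
raw = [
    {"name": "  ada   lovelace", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "grace hopper", "email": "grace@example.com"},
]
clean_rows = cleanse(raw)
print(clean_rows)
```

Because the rules live in code, two runs over the same input give the same output, which is the uniformity manual entry struggles to provide.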

5. Adaptability

Modern AI-powered collection systems don’t just work—they learn and improve continuously. These systems adapt to changing business needs, helping your organization remain agile in competitive markets. Through techniques like self-supervised learning and feedback loops, automated systems progressively enhance their accuracy over time.

This adaptability becomes crucial as data requirements evolve, enabling your business to respond quickly to emerging issues without complete system overhauls. Your data collection capabilities grow smarter just when you need them most.


Tools and Techniques for Automated Data Collection

Need the right tools to make automated data collection work? Let’s explore the essential technologies businesses use to build efficient data pipelines that fuel scalable AI implementations.

I. APIs and Web Scraping Bots

APIs act as critical bridges between applications and data networks, enabling seamless communication through standardized protocols. Research shows APIs make big data collection possible by providing standardized ways for different software applications to exchange information. Most businesses use APIs for four main purposes: accessing structured data, integrating diverse sources, enabling continuous data ingestion, and programmatically querying databases.
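Continuous ingestion from an API often comes down to following pagination until the source is exhausted. The sketch below assumes a cursor-based API that returns `items` and `next_cursor`; that response shape, `fetch_all`, and the stand-in `fake_fetch_page` are all assumptions for illustration, not any particular vendor's interface.

```python
def fetch_all(fetch_page, page_size=2):
    """Collect every record from a paginated source by following the cursor."""
    records, cursor = [], None
    while True:
        page = fetch_page(cursor=cursor, limit=page_size)
        records.extend(page["items"])
        cursor = page.get("next_cursor")
        if cursor is None:  # no further pages
            return records

# Stand-in for a real HTTP call so the example runs offline.
_DATA = [{"id": n} for n in range(5)]

def fake_fetch_page(cursor=None, limit=2):
    start = cursor or 0
    end = start + limit
    next_cursor = end if end < len(_DATA) else None
    return {"items": _DATA[start:end], "next_cursor": next_cursor}

all_records = fetch_all(fake_fetch_page)
print(len(all_records))
```

In practice `fake_fetch_page` would be replaced by a function that issues the HTTP request, and the loop would add retry and rate-limit handling.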

What about websites without APIs? That’s where web scraping comes in. This technique extracts information from HTML-coded websites, collecting large volumes of usable data. Web scraping proves invaluable for gathering data from sites without accessible APIs, performing real-time extraction, and accessing unstructured content. Businesses regularly use web scraping for building training datasets, conducting market research, monitoring competitor prices, and extracting financial data.
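To make the idea concrete, here is a minimal sketch of extracting values from HTML using only Python's standard library. The page snippet, the `price` class name, and the `PriceParser` class are hypothetical; a production scraper would also need to fetch pages and respect each site's terms and robots.txt.

```python
from html.parser import HTMLParser

class PriceParser(HTMLParser):
    """Collect the text of every element whose class attribute is 'price'."""

    def __init__(self):
        super().__init__()
        self._capture = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # Start capturing when an element is marked class="price".
        if dict(attrs).get("class") == "price":
            self._capture = True

    def handle_data(self, data):
        if self._capture and data.strip():
            self.prices.append(data.strip())
            self._capture = False

    def handle_endtag(self, tag):
        self._capture = False

# Illustrative HTML; no network access is involved.
page = (
    '<ul>'
    '<li><span class="name">Widget</span> <span class="price">$9.99</span></li>'
    '<li><span class="name">Gadget</span> <span class="price">$24.50</span></li>'
    '</ul>'
)
parser = PriceParser()
parser.feed(page)
prices = parser.prices
print(prices)
```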

II. Synthetic Data Generation

Sometimes you simply don’t have enough real data. Synthetic data—artificially generated information that mimics real-world data—offers a powerful solution when authentic data is limited or unavailable. Created through computational methods like statistical distribution modeling, model-based approaches, or advanced techniques such as Generative Adversarial Networks (GANs), synthetic data allows businesses to produce information on demand at virtually unlimited scale.
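The simplest of these methods, statistical distribution modeling, can be sketched in a few lines: fit a distribution to the real values, then sample from it. The example assumes the data is roughly normal, which is a strong simplification; `synthesize` and the sample order values are hypothetical.

```python
import random
import statistics

def synthesize(real_values, count, seed=0):
    """Sample synthetic values from a normal distribution fitted to real_values."""
    mean = statistics.mean(real_values)
    stdev = statistics.stdev(real_values)
    rng = random.Random(seed)  # seeded so runs are reproducible
    return [rng.gauss(mean, stdev) for _ in range(count)]

# Illustrative "real" observations.
real_order_values = [42.0, 55.5, 38.2, 61.0, 47.3, 50.1]
synthetic = synthesize(real_order_values, count=1000)
print(round(statistics.mean(synthetic), 1))
```

GAN-based generators follow the same goal of matching the real distribution, but learn far richer structure than a single mean and standard deviation.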

III. Self-Supervised Learning and Feedback Loops

Self-supervised learning (SSL) transforms unsupervised problems into supervised ones by automatically generating labels from the data itself. This approach minimizes dependency on labeled datasets through techniques like autoencoding, autoregression, and masking. The efficiency gain is substantial: models pre-trained with SSL typically require only a fraction of the labeled data needed for traditional supervised learning.
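Masking shows how labels can come from the data itself: hide one word and ask the model to predict it. The sketch below only builds the training pairs and assumes whitespace tokenization; `masked_examples` and the `[MASK]` token are illustrative choices.

```python
def masked_examples(sentence, mask_token="[MASK]"):
    """Yield (masked_sentence, hidden_word) pairs, one per word position."""
    words = sentence.split()
    for i, word in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        yield " ".join(masked), word

pairs = list(masked_examples("data feeds every model"))
for masked_text, target in pairs:
    print(masked_text, "->", target)
```

No human annotated anything here: a four-word sentence produced four labeled examples on its own.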

IV. Human-in-the-Loop Systems

Even the best automation needs human oversight. Human-in-the-loop (HITL) systems integrate human judgment into automated processes at critical decision points. Beyond improving accuracy, HITL provides essential ethical oversight, bias mitigation, and adaptability to new situations. Businesses implementing human-in-the-loop data management report higher confidence in the outcomes of their AI investments.
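A critical decision point is often implemented as a confidence threshold: the system accepts what it is sure about and queues the rest for a person. The sketch below assumes each prediction carries a confidence score; `route`, the 0.9 threshold, and the sample predictions are hypothetical.

```python
def route(predictions, threshold=0.9):
    """Split predictions into auto-accepted items and a human review queue."""
    accepted, review_queue = [], []
    for item in predictions:
        target = accepted if item["confidence"] >= threshold else review_queue
        target.append(item)
    return accepted, review_queue

# Illustrative model outputs.
predictions = [
    {"id": "doc-1", "label": "invoice", "confidence": 0.97},
    {"id": "doc-2", "label": "receipt", "confidence": 0.62},
    {"id": "doc-3", "label": "invoice", "confidence": 0.90},
]
accepted, review_queue = route(predictions)
print([p["id"] for p in review_queue])
```

Raising the threshold sends more work to reviewers and lowers the risk of silent errors; the right setting depends on how costly a mistake is.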

V. MLOps Frameworks

How do you manage your machine learning pipelines effectively? MLOps frameworks automate the entire machine learning lifecycle, from initial data validation to final model deployment. These systems establish continuous training pipelines that can be triggered on demand, on schedules, when new data becomes available, or when model performance begins to degrade. Effective MLOps includes tracking experiment outcomes, managing different model versions, and continuously monitoring real-world performance.
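The four triggers named above (on demand, scheduled, new data, degraded performance) can be expressed as one decision function. This is a sketch under stated assumptions: `PipelineState`, `retraining_reasons`, and every threshold value are hypothetical and not taken from any specific MLOps framework.

```python
from dataclasses import dataclass

@dataclass
class PipelineState:
    manual_request: bool
    hours_since_last_run: float
    new_rows: int
    current_accuracy: float

def retraining_reasons(state, schedule_hours=24, min_new_rows=10_000, accuracy_floor=0.85):
    """Return every trigger that currently calls for a retraining run."""
    reasons = []
    if state.manual_request:
        reasons.append("on demand")
    if state.hours_since_last_run >= schedule_hours:
        reasons.append("schedule")
    if state.new_rows >= min_new_rows:
        reasons.append("new data")
    if state.current_accuracy < accuracy_floor:
        reasons.append("performance degradation")
    return reasons

# Illustrative state: plenty of new data and accuracy below the floor.
state = PipelineState(
    manual_request=False,
    hours_since_last_run=6.0,
    new_rows=25_000,
    current_accuracy=0.81,
)
reasons = retraining_reasons(state)
print(reasons)
```

Returning the reasons rather than a bare yes/no makes each retraining run easier to audit afterward.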

Ethical and Practical Challenges

Implementing automated data collection isn’t just about technology—it introduces complex ethical dilemmas alongside technical hurdles. Businesses must navigate these challenges thoughtfully to build sustainable AI systems.

Data bias remains a fundamental concern in automated collection systems. Without active intervention, algorithms reflect the biases present in their training datasets, potentially creating incomplete or skewed representations of available evidence. This issue becomes particularly problematic given existing publication bias toward English-speaking and high-income countries. Even when using active learning, automated tools inherit the biases of the human reviewers who supervised their training.

Do you think there’s a perfect tool for automating data collection? Unfortunately, there’s rarely a “silver bullet” capable of handling an entire data collection process from start to finish. Instead, businesses must combine multiple software types that often don’t integrate seamlessly, complicating workflows and increasing overhead.

Trust and transparency create additional roadblocks. Concerns about tool performance (objectivity, accuracy, reproducibility) and methodological transparency cause stakeholders to hesitate. Many business leaders express uncertainty about automation’s limitations and decision points.

Several practical hurdles compound these ethical concerns:

- Resource constraints hit smaller organizations hardest, as they often lack staff with sufficient time for training or specialized IT skills

- Initial implementation demands significant upfront investment before realizing returns

- Organizational resistance to change and cultural shifts can undermine successful adoption

- Integration of data from various sources with different formats creates complex technical challenges

Throughout implementation, AI data collection companies must balance privacy with utility—a core ethical tension. Facial recognition and other biometric data collection raise serious privacy implications, making robust governance frameworks essential.

The regulatory landscape continues evolving rapidly too. The EU’s AI Act, for instance, classifies algorithmic systems by risk level, prohibiting those deemed “unacceptable” while imposing strict obligations on “high-risk” systems. Such regulations directly impact how AI data collection services operate across jurisdictions.

Navigating these challenges therefore requires businesses to prioritize ethical frameworks alongside technical implementations. This ensures AI data collection serves beneficial purposes without compromising fundamental values or violating regulations.

Closing Thoughts

Automated data collection stands as the foundation for businesses seeking to scale their AI implementations effectively. Though technical challenges and ethical considerations demand careful attention, automated systems deliver clear advantages through faster processing, consistent results, and significant cost savings.

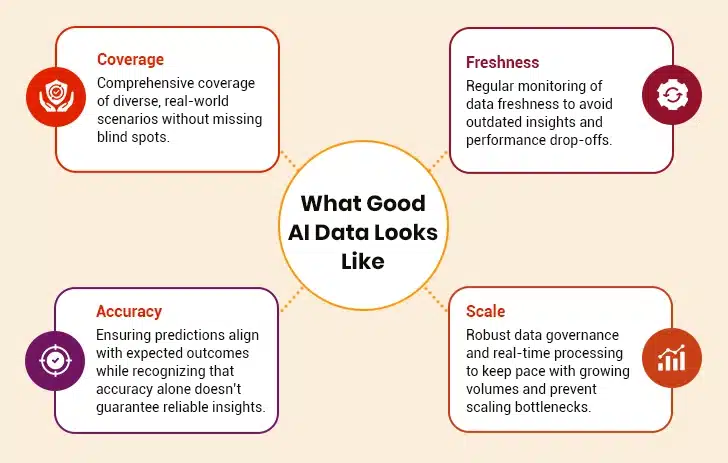

What makes automation successful? A balanced approach. Businesses must pair robust automated collection systems with strong governance frameworks while maintaining human oversight at critical decision points. Data quality remains paramount – comprehensive coverage, real-time freshness, and careful validation determine how effective your AI models ultimately become.

Businesses that thoughtfully address these elements position themselves to overcome traditional data collection bottlenecks that plague so many AI initiatives. Rather than viewing automation as a complete solution, successful organizations treat it as an essential tool within a broader data strategy.

This measured approach, combined with proper attention to ethical considerations and quality controls, enables truly scalable AI implementations that deliver lasting business value. With the right strategy, companies can unlock the full potential of their AI investments while maintaining the data quality foundation necessary for ongoing success.